What is a Chatbot?

So this is my first blog post (I mean first ever blog post). It's about time I started writing about my experiences over the last 15 or so years of making chatbots, so here goes. I'll start at the beginning, with "what is a chatbot?"

I'm not going to go into the history, but instead discuss the components that typically make up a chatbot. This is from the perspective of using Rasa as our primary chatbot platform, but it also applies to other platforms.

A chatbot is a computer application designed to converse with another party, usually a human, with the aim to provide a useful or entertaining experience. This could be anything from a customer support system that answers questions about resetting passwords, to a marketing chatbot that proactively tries to market a new movie.

This communication can occur via a graphical user interface (e.g. Facebook Messenger or on a website), SMS, or a phone call. Either way, the core technology is the same: a chatbot receives a message from a user and attempts to respond based on the current conversation state and any contextual information available.

Different Types of Chatbot

Not all chatbots are built equally, so let’s go through some common types. Each can be thought of as an extension of the former (it’s more of a spectrum than distinct types).

The simple QA bot

The simplest type of chatbot, able to understand basic questions and respond with FAQ-style canned responses. Generally there isn’t much in the way of dialogue (i.e. back and forths, confirmation steps, context handling, etc.) and the focus of these systems is often to provide customer support and issue resolution rather than have a conversational experience.

A common issue here is the temptation to take static FAQs from a website and simply transfer them into a chatbot, hoping for a good experience to emerge. In my experience this is hopeful at best. However, if you create good content and cover the top asked questions, you can make a significant impact on customer service costs. This is where people often start when creating a chatbot, and might be considered the first phase of a typical project.

The QA bot with follow on options

An extension of the above is the ability to have multi-step interactions for particular questions, where the chatbot needs to ask clarification questions or collect information from the user. Here we’re still effectively building a chatbot based on a list of questions, but some of those questions take the user into a structured flow where they click buttons and enter well-defined data to progress the “conversation” and solve their issue.

The main difference here is that the chatbot is stateful (i.e. the chatbot knows the current state of the conversation and details of previous transactions) and can respond based on this context.

The Personalised Bot

This chatbot aims to provide a customised experience for each user based on data we know about them. This could be simple data like a user’s name or age, or things like recently purchased products, their favourite movie or even whether they are a dog or a cat person. A personalised chatbot can then use this data in responses or to steer the conversation in a particular direction.

The user data might come from a variety of places, such as the user’s profile (if logged in), entities extracted from user messages, external information, etc. Any data such as this is generally thought of as a contextual variable, i.e. we are building context so we can provide a more specific and personalised experience.

The Conversational Bot

This chatbot can converse in a similar way to a human, dynamically handling different topics and side questions, all while managing the broader objectives (i.e. staying on track) and providing a personalised experience. Many would say this kind of chatbot doesn’t really exist yet, at least not at scale across all conversations. Every user chat is different: one user might have a great and seemingly “conversational” experience, while another might not have their questions answered and the experience falls apart.

A common issue with conversational chatbots is the amount of content required to respond to all the various user questions in all the various contexts. The more conversational, the more content you will generally need to manage. Unless you have a way of generating the required content in a more automated fashion, is a truly conversational chatbot really achievable and manageable? Some might say that a chatbot doesn’t need to be truly conversational, it just needs to solve a problem, so perhaps there is some middle ground.

Under the Hood

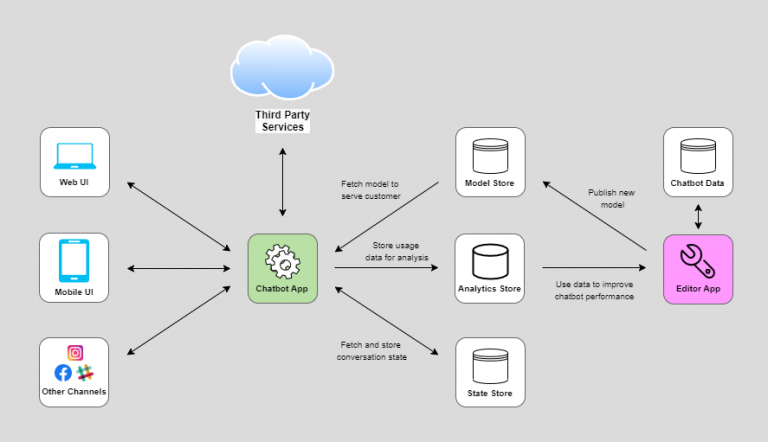

Let’s take a high-level view of what a typical chatbot implementation might look like. Here’s a list of high-level components:

- User Interface: Where the user interacts with the chatbot, such as a website chat interface, Facebook Messenger or via a telephone/voice conversation.

- Chatbot App: The runtime service that powers the chatbot conversation and serves end users, often in the form of a web service (e.g. a REST API).

- Editor App: Where we create and edit content/assets for the chatbot. This is usually some form of multi-user online application where you can manage all aspects of the chatbot, from creation and testing through to publishing and analysis.

- Chatbot Data Store: Where we store the data (intents, entities, response content, etc) created in our Editor App.

- State Store: Where we store the current state of all active conversations with users.

- Model Store: Where we store the data for each published version of our chatbot (aka our “model”, or “publish file”). This file includes all data required for the chatbot to operate, such as the machine learning classifier, rules and responses. When we publish from our Editor App we are creating a new model and storing it in our Model Store. The Chatbot App can then fetch this model and use it to serve users.

- Analytics Store: Where we store chatbot usage data, such as intents matched, sentiment, whether the user has escalated to a human, or any other business intelligence data relating to the user conversation.

Typical Flow for a User Question

- User Interface receives a user question.

- Question is passed to the Chatbot App.

- Chatbot App fetches conversation state for this user from State Store.

- Chatbot App uses question and conversation state to decide on the best response.

- Chatbot App sends response to User Interface for presentation to the user.

- Chatbot App stores updated conversation state to State Store.

- Transaction data is written to the Analytics Store for later analysis.
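The flow above can be sketched in a few lines of Python. This is only a minimal illustration under my own assumptions, not how any particular platform implements it: the in-memory dictionary and list stand in for the State Store and Analytics Store, and `handle_message` and `choose_response` are hypothetical names with deliberately trivial logic.

```python
from typing import Dict, List

# Hypothetical in-memory stand-ins for the State Store and Analytics Store.
state_store: Dict[str, dict] = {}
analytics_store: List[dict] = []


def choose_response(question: str, state: dict) -> str:
    """Toy decision logic: greet on the first turn, echo afterwards."""
    if state["turns"] == 0:
        return "Hi! How can I help?"
    return f"You said: {question}"


def handle_message(user_id: str, question: str) -> str:
    """Process one transaction: fetch state, respond, persist, log."""
    # Fetch conversation state for this user (or start a new conversation).
    state = state_store.get(user_id, {"turns": 0})
    # Use the question and the conversation state to decide on a response.
    response = choose_response(question, state)
    # Store the updated conversation state.
    state_store[user_id] = {"turns": state["turns"] + 1}
    # Write transaction data for later analysis.
    analytics_store.append(
        {"user_id": user_id, "question": question, "response": response}
    )
    # Hand the response back so the User Interface can present it.
    return response
```

In a real deployment the two stores would be external services (a database or cache), so that any instance of the Chatbot App can pick up any user's conversation.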

Inside the Chatbot Engine

How does a chatbot process a user’s message? Most break it down into two parts: understanding the user message and coming up with a response. Let’s go through each part.

Understanding the User Message

Generally referred to as NLU (Natural Language Understanding), this is where we take the user message (e.g. “I want a mobile phone with at least a 7 inch screen”) and look to extract the general intent of the user (e.g. “making a purchase”), and any associated information (e.g. a “mobile phone” product with a minimum screen size of “7” inches). We’re essentially trying to find structured meaning in the user message that a computer program can use to find the best response. The actual output of the NLU process might look as follows:

- intent: “make_purchase”

- entities:

  - product: “mobile_phone”

  - min_screen_size: 7
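To make that output concrete, here is a toy sketch that produces the same structure as a Python dictionary. It uses hand-written keyword and regex rules purely for illustration; real NLU components rely on trained models, and `parse_message` is a hypothetical name rather than a function from any platform.

```python
import re


def parse_message(text: str) -> dict:
    """Toy rule-based NLU. Real systems use trained models instead."""
    lowered = text.lower()
    result = {"intent": None, "entities": {}}

    # Intent: a crude keyword check standing in for an intent classifier.
    if "i want" in lowered or "buy" in lowered:
        result["intent"] = "make_purchase"

    # Entities: simple pattern matches standing in for an entity extractor.
    if "mobile phone" in lowered:
        result["entities"]["product"] = "mobile_phone"
    match = re.search(r"at least an? (\d+)\s*inch", lowered)
    if match:
        result["entities"]["min_screen_size"] = int(match.group(1))

    return result
```

Calling `parse_message("I want a mobile phone with at least a 7 inch screen")` returns the intent `make_purchase` with the entities `product: mobile_phone` and `min_screen_size: 7`. Rules like these break down quickly on real user input, which is exactly why machine learning is used for this step.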

Response Selection and Generation

Once we have an understanding of the user message we can start the process of choosing an appropriate response. The chatbot needs to take into account a few things here, such as:

- The user’s question and extracted intent/entities.

- The conversation state and whether the user has just been prompted for information or a specific context has been set. For example, if the chatbot has just prompted the user for their favourite movie then we likely need to respond in context on the next transaction and match/extract the movie if possible.

- Current contextual variables such as whether the user is logged in or what products they have purchased, for example.

- Topics discussed in previous transactions.

Deciding on the next conversation step or action is also referred to as Dialogue Management, and the specifics of how this is implemented depend largely on the chatbot platform being used. Typical approaches to dialogue management are as follows:

- Simple Question and Answer: This is the most simple mechanism used in a chatbot and just means that if a particular intent is matched we should give a particular answer or action. I almost didn't mention this one because it's so basic, but technically it is a component of dialogue management, albeit one that occurs within a single transaction.

- Finite State Machine: This sounds pretty cool but what we're really talking about here is a flow chart detailing how to respond to a particular issue, with all the various steps and clarifications. As the conversation progresses the chatbot moves through the flowchart from node to node (i.e. each node is a "finite state"), prompting the user for input as it goes. This can be a reliable approach but can also get complicated as you account for lots of contextual follow ons.

- Frame Based: A frame based approach is less about a specific path, and more about fulfilling objectives. To solve a user issue we often need to know certain information before providing a solution. For example, if a user is ordering a pizza we might need to know the size, the toppings and perhaps the user's dietary requirements. A Frame Based approach involves asking for each piece of information in succession using predefined prompts. Once all the data is obtained the chatbot can execute an action and respond accordingly. The nice thing about this approach is the dynamic ability to extract information without a fixed path. The user can provide information in separate steps or all at once, and the chatbot only ever asks for information it doesn't already know.

- Context Labels and Rules: This approach involves assigning context/topic labels to intents and responses (e.g. tags relating to what the conversation is currently about, such as 'smalltalk' or 'movies'). This then allows follow up rules and content in relation to the current context labels set in previous transactions. This essentially allows the chatbot to stay on topic, but is painful to manage.

- Probabilistic: This is where we would typically rely on machine learning to provide the best next action or response. Essentially this is a classifier that's been trained on good examples of conversations and tries its best to replicate them with real users. The theory is that the chatbot will follow these good examples while also 'generalizing' to allow other "good" conversations not accounted for in the training data. This approach has its risks but with good training data coverage can be pretty effective and create more "human" and conversational experiences. Just don't expect immediate results.
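As an illustration of the frame based approach from the list above, here is a minimal sketch using the pizza example. The frame contents, prompts and function names (`PIZZA_FRAME`, `next_prompt`, `update_slots`) are all hypothetical, and a real platform would add validation and error handling on top.

```python
from typing import Dict, Optional

# Hypothetical frame for a pizza order: slot name -> prompt to ask the user.
PIZZA_FRAME: Dict[str, str] = {
    "size": "What size would you like?",
    "toppings": "Which toppings would you like?",
    "dietary_requirements": "Any dietary requirements?",
}


def update_slots(slots: Dict[str, str], entities: Dict[str, str]) -> Dict[str, str]:
    """Merge newly extracted entities into the slots already filled."""
    return {**slots, **entities}


def next_prompt(frame: Dict[str, str], slots: Dict[str, str]) -> Optional[str]:
    """Return the prompt for the first unfilled slot, or None when complete."""
    for slot_name, prompt in frame.items():
        if slot_name not in slots:
            return prompt
    return None
```

If the user opens with "a large mushroom pizza please" and the NLU step extracts both `size` and `toppings`, then `next_prompt` skips straight to the dietary requirements question. Once it returns `None`, the frame is complete and the chatbot can execute the order action.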

Most platforms are based on the frame based approach to dialogue management (Dialogflow, LUIS and IBM Watson, for example), but some tools, such as Rasa, allow for a hybrid approach mixing frame based and probabilistic machine learning approaches.

So which is best? This often depends on the project and audience. For a healthcare chatbot you may have a very specific idea of the conversation path, and any machine learning approach that might mean the chatbot provides wrong information is a risk you don’t want to take. However, for a chatbot that’s promoting a new movie it may be less important to always provide a “correct” response and we can allow machine learning to make more generalised decisions with a focus on a more conversational experience.

Once the next conversation step or action is decided the chatbot needs to actually define a response to send to the user. This process is usually referred to as Natural Language Generation (NLG), and includes two typical approaches:

- Templated Responses: Here the human chatbot editor creates text templates for use in responses. These templates allow for dynamic placeholders for presentation of any information known to the chatbot (i.e. slots). Response templates can usually be defined against different channels, and can include interactive elements such as buttons, carousels and images/videos. This is the typical approach used across many tools in the marketplace.

- Machine Learning Response Generation: Much in the same way that the chatbot can be trained to understand user intent and extract entities using a machine learning approach, we can also train a chatbot to do the opposite, i.e. define a dynamic response based on training data (i.e. examples of humans doing the same). Again, this depends on how much control you are prepared to give to the machine learning algorithms.
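Templated responses are easy to picture in code. The sketch below is an assumption-laden illustration rather than any platform's actual format: `TEMPLATES` and `render` are hypothetical names, and the placeholders are filled with Python's built-in `str.format`.

```python
# Hypothetical templates keyed by response name, then by channel.
TEMPLATES = {
    "greet_user": {
        "default": "Hi {user_first_name}, how can I help you today?",
        "sms": "Hi {user_first_name}. How can I help?",
    }
}


def render(response_name: str, slots: dict, channel: str = "default") -> str:
    """Pick the channel-specific template (or the default) and fill in slots."""
    variants = TEMPLATES[response_name]
    template = variants.get(channel, variants["default"])
    # Slots that the template does not mention are simply ignored.
    return template.format(**slots)
```

For example, `render("greet_user", {"user_first_name": "Sandy"}, channel="sms")` gives the shorter SMS wording, while an unknown channel falls back to the default template.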

Chatbot Memory

Much like humans, chatbots need to be able to remember things about the conversation, such as the user’s name or location. Chatbots typically use ‘slots’ to store this data throughout a conversation, allowing it to be used in decision making logic at a later stage, or repeated back to the user.

You can pretty much use a slot to store any data (within reason). Here are a few examples of typical slots a chatbot might use:

- user_first_name: If the user says ‘my name is Sandy’ we could extract and store the value ‘Sandy’ to our user_first_name slot. We can then use the slot later when referring to the user. We may also know their first name if they are logged in and we have access to their account.

- payment_amount: For a chatbot that handles payments, we might need to ask for the amount the user wants to pay, and then store this to the slot payment_amount. We can then use the value later in an API call to actually trigger the payment. In addition we might need slots for payment_recipient and payment_date.

- channel: “Channel” refers to the method by which the user is interacting with the chatbot, such as via the website, on Facebook Messenger, Slack, over the phone, etc. We often use this slot to give different responses based on the current channel. For example, for the SMS channel you may want shorter answers, for the phone channel you need answers without any links, and for the website channel your answers can use all sorts of buttons and interactivity.

- user_id: Sometimes slots are used for things completely hidden from the user. If a user is logged in to the website and launches the chatbot, specific customer identifiers may be passed to the chatbot, allowing the chatbot to use these for API lookups or advanced logic.
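Here is a rough sketch of what slot storage might look like for a single conversation. `SlotMemory` and `remember_first_name` are hypothetical names, the storage is in memory only, and the regex is a stand-in for proper entity extraction.

```python
import re
from typing import Any, Dict, Optional


class SlotMemory:
    """Minimal per-conversation slot storage (hypothetical, in memory only)."""

    def __init__(self, initial: Optional[Dict[str, Any]] = None) -> None:
        # Hidden slots such as user_id or channel can be seeded at launch.
        self._slots: Dict[str, Any] = dict(initial or {})

    def set(self, name: str, value: Any) -> None:
        self._slots[name] = value

    def get(self, name: str, default: Any = None) -> Any:
        return self._slots.get(name, default)


def remember_first_name(message: str, memory: SlotMemory) -> None:
    """Store the name from a message like 'my name is Sandy', if present."""
    match = re.search(r"my name is (\w+)", message, flags=re.IGNORECASE)
    if match:
        memory.set("user_first_name", match.group(1))
```

A conversation launched from a logged-in website session might start with `SlotMemory({"channel": "website", "user_id": "abc123"})`, and the `user_first_name` slot gets filled later when the user introduces themselves.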

Integration with Third Parties

Most chatbot platforms allow you to integrate with third party services, either using a particular programming language (e.g. Python, JavaScript, etc.) or predefined modules to handle the integration (no-code).

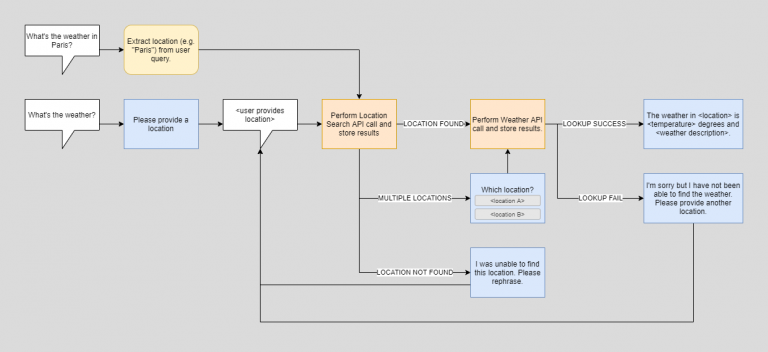

A useful example is a weather chatbot, where the user might ask “What is the weather in Paris?”. Here we might need to do two integrations:

- Google Location Search: To search the location of ‘Paris’ and get back coordinates.

- Weather Lookup: Pass coordinates and get back current weather, temperature, etc.

We also need to consider what to do if we do not find the location, or if multiple locations are found with the name ‘Paris’ (there’s one in the United States as well as France). It can get complex fast...
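The branching logic for this example might look something like the sketch below. To keep it runnable without network access, the two integrations are passed in as functions, and `stub_geocode` and `stub_weather` are made-up stand-ins with hard-coded sample values, not real API clients. All names here are hypothetical.

```python
from typing import Any, Callable, Dict, List

Location = Dict[str, Any]


def weather_reply(
    place: str,
    geocode: Callable[[str], List[Location]],
    fetch_weather: Callable[[float, float], str],
) -> str:
    """Build a reply, handling zero, one, or several matching locations."""
    matches = geocode(place)
    if not matches:
        return f"Sorry, I couldn't find a place called {place}."
    if len(matches) > 1:
        # Ambiguous: ask the user a clarification question.
        options = " or ".join(m["label"] for m in matches)
        return f"Which {place} do you mean: {options}?"
    location = matches[0]
    summary = fetch_weather(location["lat"], location["lon"])
    return f"The weather in {location['label']} is {summary}."


def stub_geocode(place: str) -> List[Location]:
    """Stand-in for a location search service, with hard-coded samples."""
    samples = {
        "paris": [
            {"label": "Paris, France", "lat": 48.86, "lon": 2.35},
            {"label": "Paris, Texas", "lat": 33.66, "lon": -95.56},
        ],
        "oslo": [{"label": "Oslo, Norway", "lat": 59.91, "lon": 10.75}],
    }
    return samples.get(place.lower(), [])


def stub_weather(lat: float, lon: float) -> str:
    """Stand-in for a weather service. Always returns a made-up summary."""
    return "sunny, 20 degrees"
```

With these stubs, asking about "Paris" triggers the clarification question, "Oslo" gets a direct answer, and an unknown place gets the apology. In a real chatbot the user's answer to the clarification would then need to be handled in context on the next transaction.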

In conclusion…

Chatbots are not magical AI beings that learn like humans. They can’t respond relevantly to every user utterance and they will often fail on what seems like the simplest question to a human. They are logical systems and will only understand what a human editor tells them to understand.

If you’re planning to build a chatbot you need to be realistic about your expectations, and make sure you focus on the things your users really want to talk about. This often means performing analysis on user questions in other channels (emails, live chat, site search, Facebook queries, etc.) and defining a robust set of intents (or ‘questions’) you expect users to ask. If you get this step wrong you may end up wasting significant time and effort creating content for questions your users never ask.

You also need to think about what chatbot platform to use, and whether it supports your long term goals. Most chatbot platforms provide a way to answer simple questions with an FAQ-style canned response, but can yours support complex conversational structures combining bot responses, script logic, multiple integrations, context handling, objectives and proactivity? Good chatbots get complex pretty quickly, so you need to plan for where your chatbot might be in a year's time, and what tools you will need to support it.